New Reality Engine

- Date

- Spring 2008 - Summer 2009

- Tech

- C++, OpenGL, Win32, GLSL

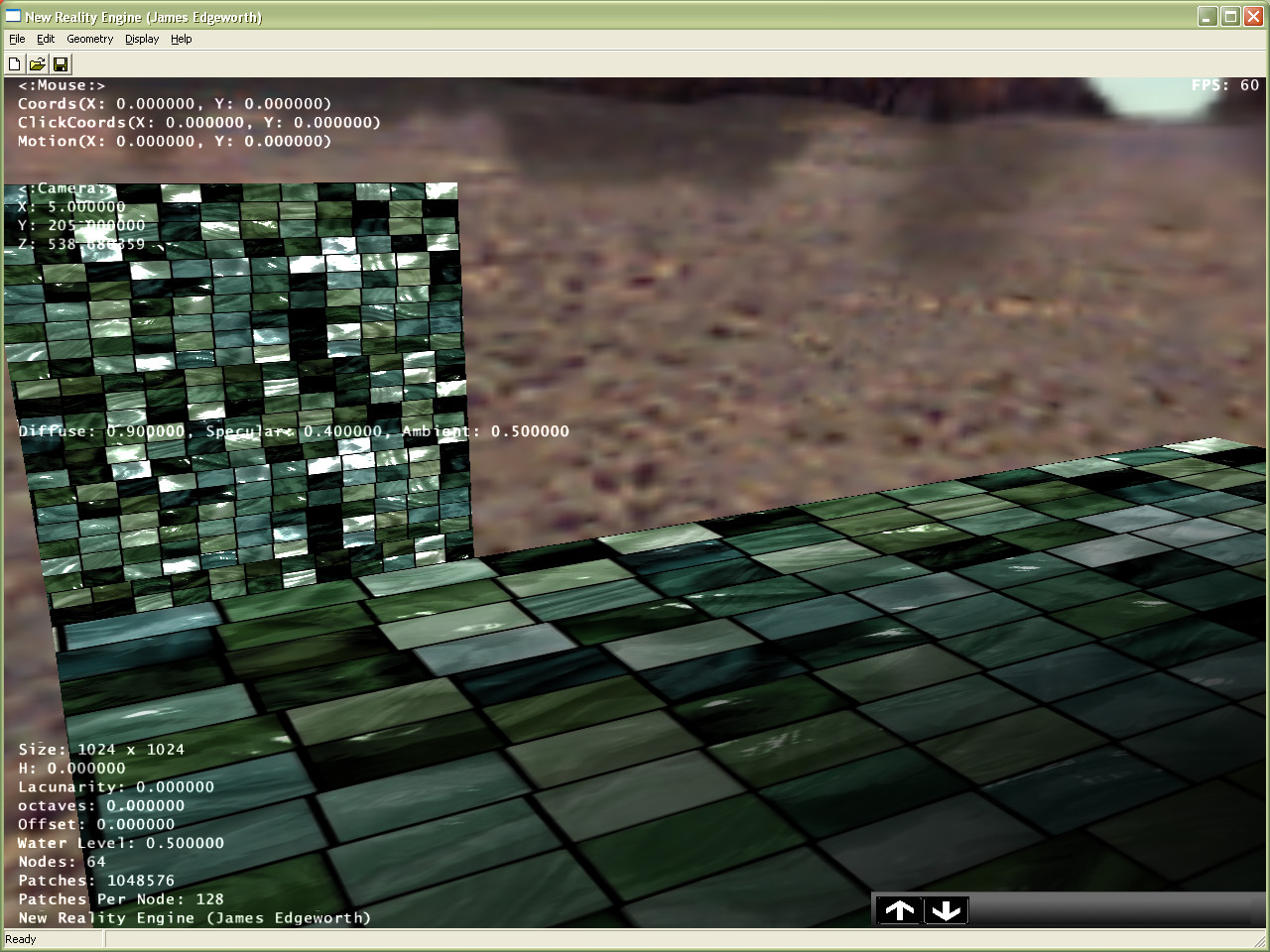

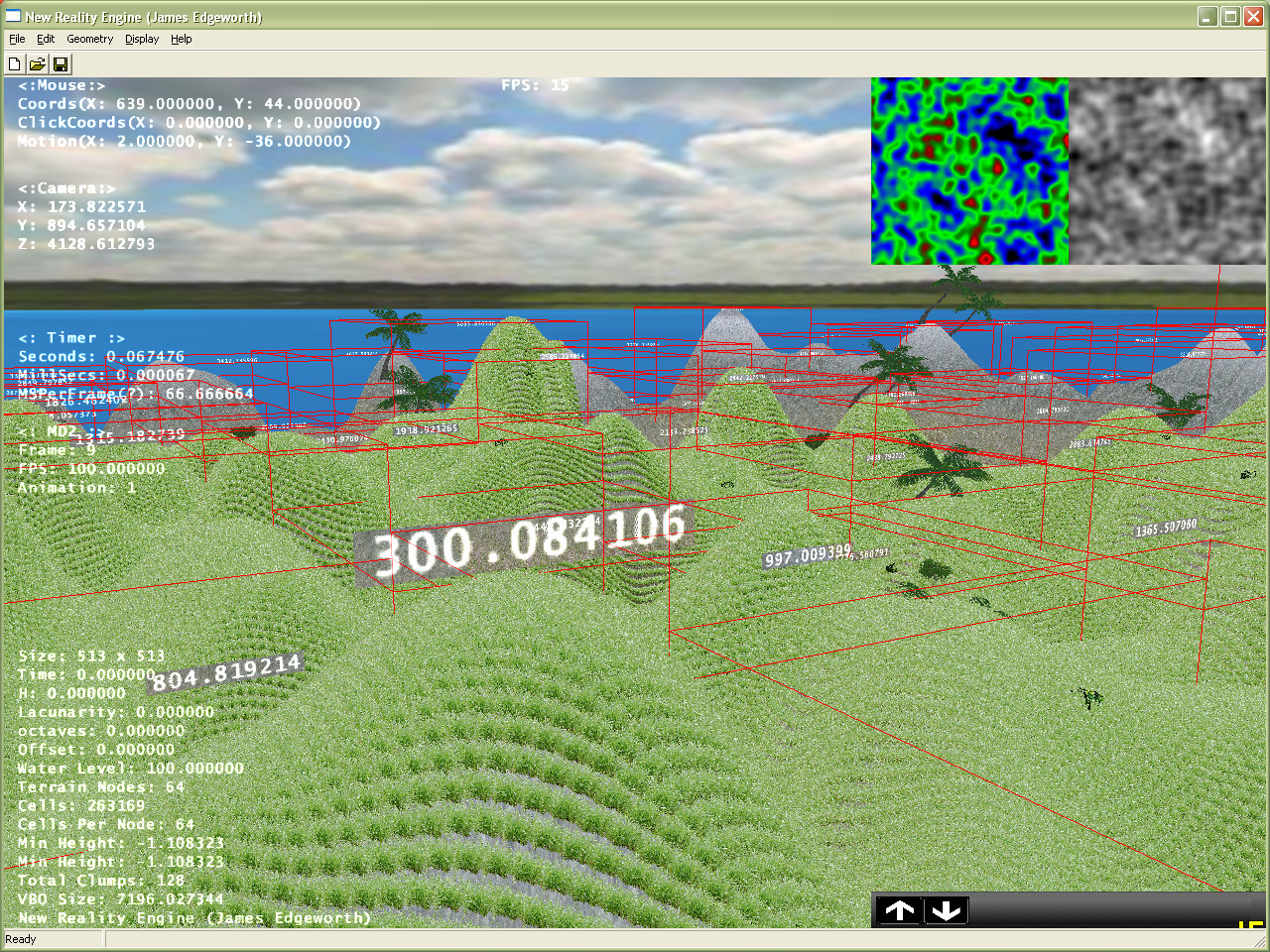

Procedural Terrain

My dissertation was on procedural terrain generation, experimenting with multifractals to generate the most realistic terrain possible.

Personally, I wouldn't call the results realistic, and I ventured way off target with this: multi-texturing, foliage, skydomes, water, cubemapping and geomipmapping weren't part of the dissertation.

Several algorithms were used. The fault line algorithm was very easy to implement, but produced very "single octave" results. Multifractals were by far the most realistic, but needed an extremely large terrain size and very slight tweaks to the noise parameters to get the best results.
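To show where the "single octave" look comes from, here is a minimal C++ sketch of the classic fault formation approach. It is not the engine's original code: the name `faultLineTerrain`, the linear shrinking of the displacement, and the use of `std::mt19937` with a fixed seed are all assumptions for illustration.

```cpp
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical sketch of fault formation: every iteration draws a random line
// across the grid, raises the points on one side and lowers the other.
// The displacement shrinks linearly from maxDisplacement to minDisplacement,
// but every fault still spans the whole map, so fine detail is scarce.
std::vector<float> faultLineTerrain(int width, int depth, int iterations,
                                    float maxDisplacement, float minDisplacement,
                                    unsigned seed) {
    std::vector<float> heights(static_cast<std::size_t>(width) * depth, 0.0f);
    std::mt19937 rng(seed);
    std::uniform_int_distribution<int> randX(0, width - 1);
    std::uniform_int_distribution<int> randZ(0, depth - 1);

    for (int i = 0; i < iterations; ++i) {
        const float t = iterations > 1 ? static_cast<float>(i) / (iterations - 1) : 0.0f;
        const float displacement = maxDisplacement + (minDisplacement - maxDisplacement) * t;

        // Two random grid points define the fault line.
        const int x1 = randX(rng), z1 = randZ(rng);
        const int x2 = randX(rng), z2 = randZ(rng);
        if (x1 == x2 && z1 == z2) {
            continue; // degenerate line, skip this iteration
        }

        for (int z = 0; z < depth; ++z) {
            for (int x = 0; x < width; ++x) {
                // The sign of the 2D cross product says which side of the line (x, z) is on.
                const int side = (x2 - x1) * (z - z1) - (z2 - z1) * (x - x1);
                heights[static_cast<std::size_t>(z) * width + x] += side > 0 ? displacement : -displacement;
            }
        }
    }
    return heights;
}
```

Because each fault cuts straight across the whole heightmap, the result is dominated by large, straight features, with no separate layers of smaller detail.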

I had other ideas to enhance the algorithms, such as varying the heights of certain points based on their generated height (so rocky surfaces were more jagged and detailed), but ultimately, multifractals are meant to do that anyway.
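The "detail depends on height" idea is exactly what a heterogeneous multifractal does. Below is a minimal C++ sketch in the style of Musgrave's heterogeneous terrain function, assuming simple hash-based value noise as a stand-in for Perlin noise; `latticeValue`, `valueNoise`, `heteroTerrain` and the hash constants are illustrative choices, not the dissertation's code.

```cpp
#include <cmath>
#include <cstdint>

// Hypothetical lattice hash: maps an integer grid point to a value in [-1, 1].
float latticeValue(int x, int z, std::uint32_t seed) {
    std::uint32_t h = static_cast<std::uint32_t>(x) * 374761393u
                    + static_cast<std::uint32_t>(z) * 668265263u
                    + seed * 2246822519u;
    h = (h ^ (h >> 13)) * 1274126177u;
    h ^= h >> 16;
    return static_cast<float>(h & 0xFFFFFFu) / static_cast<float>(0xFFFFFFu) * 2.0f - 1.0f;
}

// Smooth 2D value noise (a simpler stand-in for Perlin noise), roughly [-1, 1].
float valueNoise(float x, float z, std::uint32_t seed) {
    const float fx0 = std::floor(x), fz0 = std::floor(z);
    const int ix = static_cast<int>(fx0), iz = static_cast<int>(fz0);
    const float tx = x - fx0, tz = z - fz0;
    // Smoothstep fade so the surface has no creases along lattice lines.
    const float u = tx * tx * (3.0f - 2.0f * tx);
    const float v = tz * tz * (3.0f - 2.0f * tz);
    auto mix = [](float a, float b, float t) { return a + (b - a) * t; };
    const float bottom = mix(latticeValue(ix, iz, seed), latticeValue(ix + 1, iz, seed), u);
    const float top = mix(latticeValue(ix, iz + 1, seed), latticeValue(ix + 1, iz + 1, seed), u);
    return mix(bottom, top, v);
}

// Heterogeneous terrain multifractal: each octave's contribution is scaled by
// the height accumulated so far, so high ground gets more detail than valleys.
// H controls how quickly octaves fade; lacunarity is the frequency step.
float heteroTerrain(float x, float z, float H, float lacunarity, int octaves,
                    float offset, std::uint32_t seed) {
    float value = offset + valueNoise(x, z, seed);
    for (int i = 1; i < octaves; ++i) {
        x *= lacunarity;
        z *= lacunarity;
        const float weight = std::pow(lacunarity, -H * static_cast<float>(i));
        value += (valueNoise(x, z, seed) + offset) * weight * value;
    }
    return value;
}
```

The `* value` factor in the loop is the part that makes the result multifractal: flat, low areas damp later octaves, while peaks amplify them.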

Geomipmapping

Building this on a basic HP laptop was quite the challenge given the limitations of its AMD GPU. I _had_ to stick geomipmapping in there, if only to stop the laptop cooking itself to death.

Of all the engine's features, this is probably _the_ one I remember most. I was at a friend's house for the weekend, and pretty much the entire time, including overnight, was spent trying to get this algorithm working.

The concept is simple: the terrain is split into regular, evenly sized chunks, and the further away a chunk is, the less detail it is rendered with. Easy, until you have to deal with the seams between chunks: where a chunk borders a lower-detail neighbour, the higher-detail chunk must skip every other vertex along that shared edge so the terrain itself does not have gaps. This went well for the top and right edges, but the bottom and left edges took more time to solve than the entire rest of the algorithm.
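The two decisions in that description, picking a detail level from distance and finding which edges touch a coarser neighbour, can be sketched in C++ as follows. The doubling-distance rule, `EdgeFlag`, `ChunkGrid` and the function names are assumptions for illustration. The sketch also assumes neighbouring chunks differ by at most one level, which is the case where skipping every other edge vertex is enough.

```cpp
#include <vector>

// Hypothetical edge flags: which sides of a chunk border a coarser neighbour.
enum EdgeFlag : unsigned { kLeft = 1u, kRight = 2u, kBottom = 4u, kTop = 8u };

// Hypothetical LOD rule: level 0 (full detail) up to baseDistance, then one
// level coarser every time the distance doubles, capped at maxLevel.
int lodForDistance(float distance, float baseDistance, int maxLevel) {
    int level = 0;
    float threshold = baseDistance;
    while (distance > threshold && level < maxLevel) {
        ++level;
        threshold *= 2.0f;
    }
    return level;
}

// Chunk LOD levels stored row-major; cz grows towards the "top" edge.
struct ChunkGrid {
    int width;
    int depth;
    std::vector<int> lod;
    int at(int cx, int cz) const { return lod[cz * width + cx]; }
};

// An edge needs stitching when the neighbour on that side is coarser, so this
// chunk must skip every other vertex along it to match.
unsigned stitchFlags(const ChunkGrid& grid, int cx, int cz) {
    const int self = grid.at(cx, cz);
    unsigned flags = 0;
    if (cx > 0 && grid.at(cx - 1, cz) > self) flags |= kLeft;
    if (cx + 1 < grid.width && grid.at(cx + 1, cz) > self) flags |= kRight;
    if (cz > 0 && grid.at(cx, cz - 1) > self) flags |= kBottom;
    if (cz + 1 < grid.depth && grid.at(cx, cz + 1) > self) flags |= kTop;
    return flags;
}
```

Only the finer chunk on each seam does any work; the coarser neighbour is drawn unchanged.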

This was initially written using immediate mode rendering, and later switched to Vertex Buffer Objects. That would have been a fairly easy task had it not been for the geomipmapped chunks, but the solution was ultimately to send the entire terrain as one VBO, and to give each chunk its own index buffer objects. The performance improvement was staggering, and meant I could work with much larger terrain sizes and better draw distances.
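With one shared vertex grid, each chunk only needs an index list. The C++ sketch below builds one for a chunk at a given step size and handles seams by snapping every odd vertex on a stitched edge to the previous even one, which leaves some zero-area triangles that are simply dropped. This index-snapping variant is one way of "skipping every other vertex", not necessarily how the engine did it; `buildChunkIndices`, its parameters and `EdgeFlag` are hypothetical. The resulting vector is what would be uploaded with `glBufferData` to a `GL_ELEMENT_ARRAY_BUFFER`.

```cpp
#include <cstdint>
#include <vector>

enum EdgeFlag : unsigned { kLeft = 1u, kRight = 2u, kBottom = 4u, kTop = 8u };

// Builds the index list for one chunk inside a single, shared terrain vertex
// grid (gridWidth vertices per row). step is the LOD spacing in vertices
// (1 = full detail); chunkSize must be a multiple of 2 * step.
// On a stitched edge, every odd vertex is snapped to the previous even one,
// so that edge only uses vertices the coarser neighbour also uses.
std::vector<std::uint32_t> buildChunkIndices(int originX, int originZ, int chunkSize,
                                             int step, unsigned stitched, int gridWidth) {
    auto index = [&](int x, int z) -> std::uint32_t {
        const bool oddX = (x / step) % 2 == 1;
        const bool oddZ = (z / step) % 2 == 1;
        int sx = x, sz = z;
        if (oddX && ((z == 0 && (stitched & kBottom)) || (z == chunkSize && (stitched & kTop)))) sx -= step;
        if (oddZ && ((x == 0 && (stitched & kLeft)) || (x == chunkSize && (stitched & kRight)))) sz -= step;
        return static_cast<std::uint32_t>((originZ + sz) * gridWidth + (originX + sx));
    };

    std::vector<std::uint32_t> indices;
    for (int z = 0; z < chunkSize; z += step) {
        for (int x = 0; x < chunkSize; x += step) {
            const std::uint32_t i00 = index(x, z), i10 = index(x + step, z);
            const std::uint32_t i01 = index(x, z + step), i11 = index(x + step, z + step);
            // Two triangles per cell, split along the i00-i11 diagonal.
            const std::uint32_t tris[2][3] = {{i00, i10, i11}, {i00, i11, i01}};
            for (const auto& t : tris) {
                // Snapping collapses some triangles to zero area; drop them.
                if (t[0] != t[1] && t[1] != t[2] && t[0] != t[2]) {
                    indices.insert(indices.end(), {t[0], t[1], t[2]});
                }
            }
        }
    }
    return indices;
}
```

Since every chunk size, step and edge combination produces a fixed pattern, these index lists can be generated once up front and reused, leaving the single terrain VBO untouched.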

Each geomipmapped chunk would also have worked well with octrees, but I didn't get that far.

Model Loading

MD2 was an easy file format to work with, and reading it followed the same process as writing the Quake BSP reader. Again, the biggest hurdle was wrapping my head around the specifics of the data types.
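Most of those data types are plain 32-bit integers and bytes. Here is a minimal C++ sketch of reading the MD2 header and decompressing a frame vertex, assuming a little-endian host (as on the original Windows machine). `Md2Header`, `readMd2Header` and `decompressVertex` are illustrative names; the field order follows the standard MD2 header layout.

```cpp
#include <cstdint>
#include <cstring>
#include <optional>
#include <vector>

// MD2 header: 17 little-endian 32-bit integers at the start of the file.
struct Md2Header {
    std::int32_t ident;           // the bytes "IDP2"
    std::int32_t version;         // 8
    std::int32_t skinWidth;
    std::int32_t skinHeight;
    std::int32_t frameSize;       // bytes per frame
    std::int32_t numSkins;
    std::int32_t numVertices;
    std::int32_t numTexCoords;
    std::int32_t numTriangles;
    std::int32_t numGlCommands;
    std::int32_t numFrames;
    std::int32_t offsetSkins;
    std::int32_t offsetTexCoords;
    std::int32_t offsetTriangles;
    std::int32_t offsetFrames;
    std::int32_t offsetGlCommands;
    std::int32_t offsetEnd;
};
static_assert(sizeof(Md2Header) == 68, "MD2 header is 68 bytes");

// Copies the header out of a file buffer; assumes a little-endian host.
std::optional<Md2Header> readMd2Header(const std::vector<unsigned char>& file) {
    if (file.size() < sizeof(Md2Header)) return std::nullopt;
    if (std::memcmp(file.data(), "IDP2", 4) != 0) return std::nullopt;
    Md2Header header;
    std::memcpy(&header, file.data(), sizeof header);
    if (header.version != 8) return std::nullopt;
    return header;
}

// Frames store each vertex as 3 bytes (plus a normal index); the real
// position is byte * scale + translate, using that frame's scale/translate.
struct Vec3 { float x, y, z; };
Vec3 decompressVertex(const unsigned char v[3], const float scale[3], const float translate[3]) {
    return { v[0] * scale[0] + translate[0],
             v[1] * scale[1] + translate[1],
             v[2] * scale[2] + translate[2] };
}
```

The offsets in the header then point at the skins, texture coordinates, triangles and frames, each of which is read the same way.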

The models also included their keyframes, and the engine could animate them as intended using keyframe interpolation. This particular model was used in one of the uni assignments with extremely basic AI, to make one model chase the other: the one being chased would roam around, play its jump animation when the chaser got too close, then run off in any direction away from it.
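Keyframe interpolation itself is just a per-vertex linear blend between two stored frames. The C++ sketch below assumes frames have already been decompressed into positions and that an animation is a looping range of frame numbers; `Frame`, `interpolateFrames`, `sampleAnimation` and the playback rate are hypothetical.

```cpp
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };
using Frame = std::vector<Vec3>; // decompressed vertex positions for one keyframe

// Linear interpolation between two keyframes; t = 0 gives `from`, t = 1 gives `to`.
Frame interpolateFrames(const Frame& from, const Frame& to, float t) {
    Frame out(from.size());
    for (std::size_t i = 0; i < from.size(); ++i) {
        out[i] = { from[i].x + (to[i].x - from[i].x) * t,
                   from[i].y + (to[i].y - from[i].y) * t,
                   from[i].z + (to[i].z - from[i].z) * t };
    }
    return out;
}

// Hypothetical animation sampler: plays frames [firstFrame, lastFrame] at
// framesPerSecond, looping. timeSeconds is assumed to be non-negative.
Frame sampleAnimation(const std::vector<Frame>& frames, int firstFrame, int lastFrame,
                      float framesPerSecond, float timeSeconds) {
    const int count = lastFrame - firstFrame + 1;
    const float position = timeSeconds * framesPerSecond;
    const int whole = static_cast<int>(position);
    const float t = position - static_cast<float>(whole);
    const int current = firstFrame + whole % count;
    const int next = firstFrame + (whole + 1) % count; // wraps back to the first frame
    return interpolateFrames(frames[current], frames[next], t);
}
```

Switching between behaviours such as roaming, jumping and fleeing then amounts to changing which frame range is passed in.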

Shaders

For a long while, shaders were a section of the programming books I skimmed over, simply because the examples given were how to colour a teapot or do sharper lighting. Then I tried them, and so many burning questions about game engines were answered: what seemed like absolute magic in Half-Life 2 was now just a lighting shader.

Much of what a graphics engine does is clever hacks to get things looking visually impressive. We pass buffer objects to the GPU and tell it which shader to render them with. For a long time, shading was hard-wired into the graphics library as the fixed-function pipeline, until the programmable pipeline arrived, which allowed graphics engines to become considerably more powerful with much less computational overhead.

Probably the litmus test of shaders is simple normal mapping, and I could never get over how the tiny, under-powered GPU in the laptop could effortlessly render such surprising results thanks to shaders (I wish I had the other screenshots here).
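The maths behind basic normal mapping is small. This is a CPU-side C++ sketch of what a tangent-space normal-mapping fragment shader typically computes for diffuse lighting; in the engine this would live in GLSL. The function names and the assumption of an orthonormal tangent/bitangent/normal basis are illustrative.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return { v.x / len, v.y / len, v.z / len };
}

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Per-pixel diffuse term with a tangent-space normal map.
// texel: RGB sampled from the normal map, in [0, 1].
// tangent/bitangent/normal: the surface's TBN basis in world space.
// toLight: direction from the surface towards the light.
float normalMappedDiffuse(Vec3 texel, Vec3 tangent, Vec3 bitangent, Vec3 normal, Vec3 toLight) {
    // Unpack the [0, 1] colour into a [-1, 1] tangent-space normal.
    const Vec3 n = { texel.x * 2.0f - 1.0f, texel.y * 2.0f - 1.0f, texel.z * 2.0f - 1.0f };
    // Rotate it into world space using the TBN basis.
    const Vec3 world = normalize({
        tangent.x * n.x + bitangent.x * n.y + normal.x * n.z,
        tangent.y * n.x + bitangent.y * n.y + normal.y * n.z,
        tangent.z * n.x + bitangent.z * n.y + normal.z * n.z });
    // Lambert term: brightness falls off with the angle to the light.
    return std::max(0.0f, dot(world, normalize(toLight)));
}
```

A flat texel of (0.5, 0.5, 1.0) reproduces the plain surface normal; anything else tilts it per pixel, which is where the illusion of extra geometry comes from.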

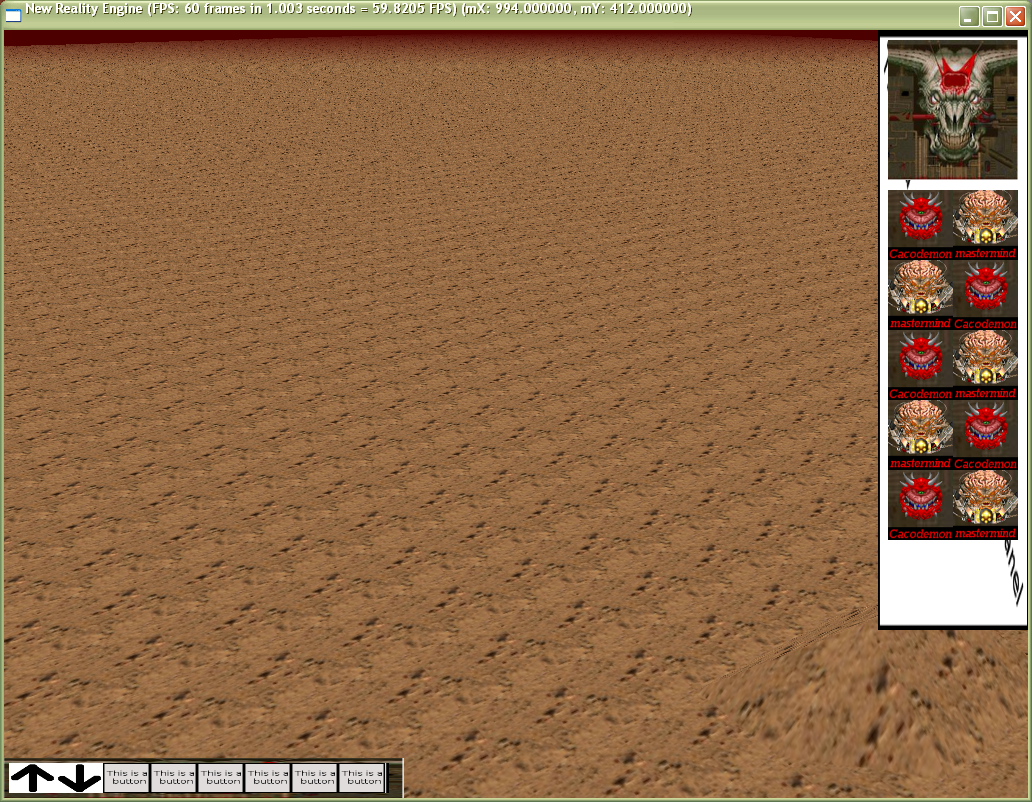

DoomRTS

This was the first "attempt" at putting the engine to good use. It was going to be an isometric RTS game similar in visuals to Red Alert 2.

The human units would of course carry the different guns from the actual game, and the enemies would be the different demon characters. One of the demon superweapons would have been the Icon of Sin, randomly spawning enemies as he does in the actual game; we hadn't thought of one for the humans.

We obviously would not have sold this game; at most it would have been a demo of what we could do, and maybe a way of giving id Software a nudge. This screenshot is really about as far as it went.

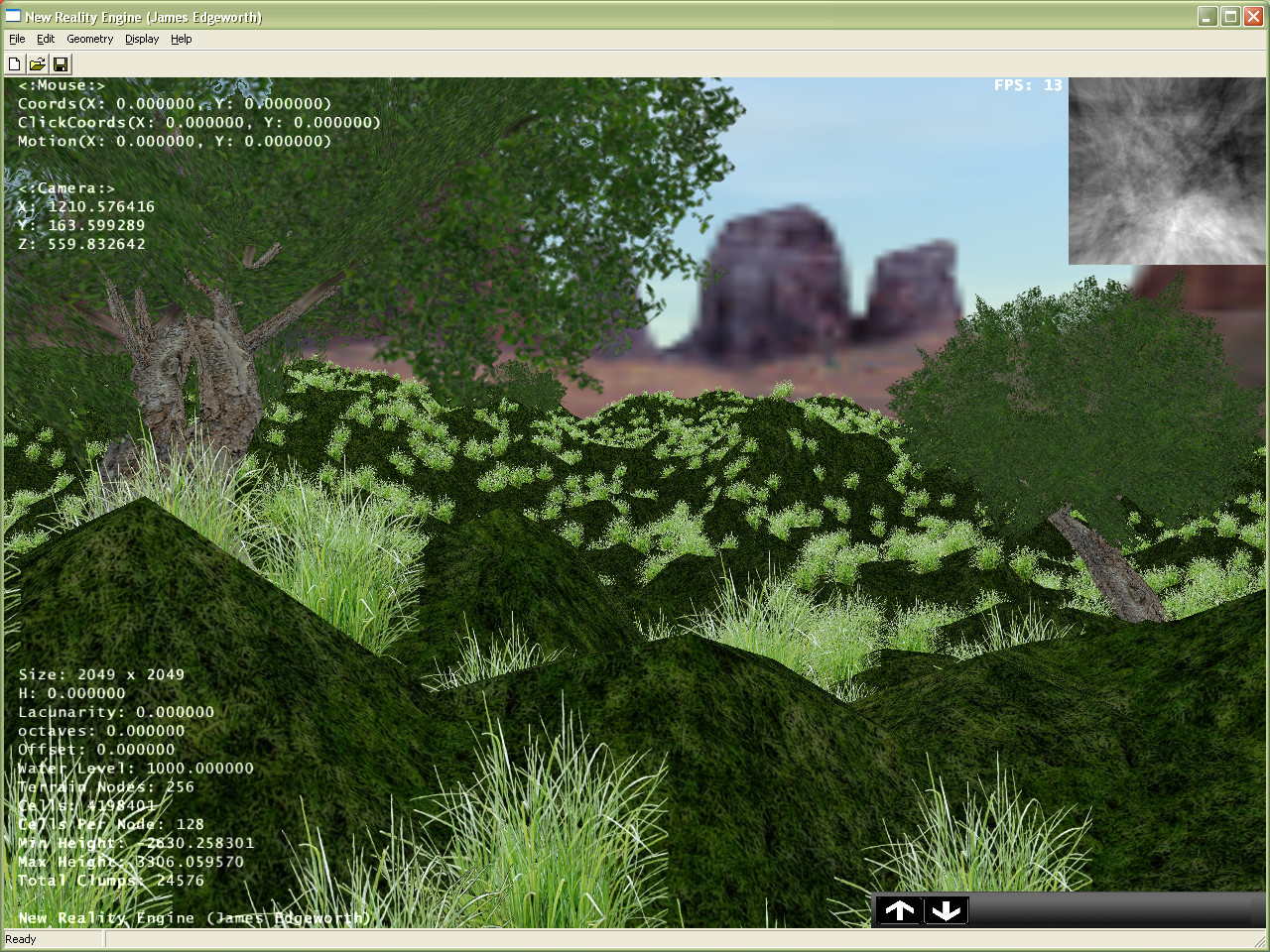

Foliage

I got really into this, having seen plenty of screenshots of lush foliage in various game worlds and GPU programming books.

The most common approach was to place thousands of billboards textured with grass images to represent widespread foliage. The GPU could then be used to displace the vertices to give the impression of foliage reacting to wind.
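The wind effect usually comes down to a few lines in the vertex shader. Here is a CPU-side C++ sketch of one common form: a sine-based sway that leaves the base of each billboard planted and moves only its upper vertices. The name `applyWind`, the `heightFactor` convention and all parameters are assumptions, not the engine's shader.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// CPU mirror of a hypothetical wind vertex shader for grass billboards.
// heightFactor is 0 for the vertices at the root of the blade and 1 at the tip,
// so the base stays planted while the top sways. phase offsets each billboard
// (for example, derived from its world position) so the field doesn't move in lockstep.
Vec3 applyWind(Vec3 position, float heightFactor, Vec3 windDirection,
               float strength, float frequency, float phase, float timeSeconds) {
    const float sway = std::sin(timeSeconds * frequency + phase) * strength * heightFactor;
    return { position.x + windDirection.x * sway,
             position.y,
             position.z + windDirection.z * sway };
}
```

Because each vertex only needs its own position, a per-billboard phase and the current time, this runs entirely on the GPU without touching the vertex buffers.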

Trees cannot realistically be represented by sprites (unless they're far away from the player), so they were 3D models.

GPU Gems 2 described a technique of rendering a 3D model into a framebuffer object, then placing many copies of the resulting FBO texture in the distance, so hundreds of objects are represented by a single model instance. I didn't get that far, though!

In the XNA version of the engine, I implemented Kevin Boulanger's procedural grass, which does an excellent job of drawing grass with minimal vertices.

Anything else...

Skyboxes, skydomes, animated grass, collision detection, and animated models (with very, very basic AI). I have the codebase, but it was written on Windows XP.